Dear fellow Turing-Tumble Enthusiasts,

After a lot of thought, I believe I’ve got a direct simulation of a Turing Machine by a natural extension of the Turing Tumble game, and a proof that the simulation is correct. Thus, I believe this proves that Turing Tumble is Turing-complete.

The “natural extension” is just to allow a finitely wide but infinitely long board (as we’ve all discussed) to represent and hold the contents of the Turing machine’s tape.

Because there is no bottom-of-board, the three different types of triggers (for the three ball colors used - this can likely be pared down to two with some work) can be placed anywhere. (The current construction uses an infinite number of triggers, but a more complicated and much less natural construction uses just finitely many (and more complicated, just two triggers))

Here https://docs.google.com/document/d/1-2qzJL158Ipfpk-WaeUmlRIYFvZTuBZhvNHZ0cs7i4w/ is a link to a draft of a paper containing a detailed description of the construction and the proof. I’d very much appreciate your keen eyes and suggestions. (It seems imprudent to allow anybody with the link to comment, the internet being what it is and all, but I’d appreciate discussion in reply to this post, or via email to lennypitt@gmail.com.) Please don’t message me on this forum - I tend to miss those.

After suitable modification, I’ll look for an appropriate recreational or other journal to submit the paper to, so that it gets “properly” peer-reviewed. I’ve used quotes because I trust that the audience here will understand the construction better than a random reviewer, especially one who hasn’t played extensively with Turing Tumble. Why submit it then? I’d like to have the proof also carefully reviewed. Often one finds errors in a method or solution only when one tries to prove it correct. This happened in this case a few times - I thought I had everything down pat, then was half-way through a proof when I said “hmmm - but I need to show thus-and-so”. But alas, “thus-and-so” was not true for some subtle reason, and back to the drawing board…)

So, I think the exposition will benefit enormously from having different audiences look it over. (Suggestions on where to send it to would be welcome.)

Of course, there may be subtle (or not-so-subtle) flaws in the construction, or the proof, or both. So, I ask you to help me determine if that is the case, or otherwise improve the exposition, and if this doesn’t in fact work, how can it be modified so that it will?

While there are a lot of details and nuance, the high level ideas are not hard. I thought I’d share them here in a narrative to give an idea of what was done, without having to delve into minutiae. I hope the following makes sense - I’ve lost a lot of perspective, and this is partly stream-of-consciousness. The paper itself hopefully contains a lot of this intuition.

A finite control sits at the top of the board, and is responsible for keeping track of the current state of the TM, and executing the state-changing function.

This is fairly routine: a ball falls down a branching tree to determine what state the TM is in (stored in a gear bit), then based on the current symbol read, it changes the state by updating the gear bits holding the state information. Of course, how does it know the current symbol being scanned by the TM, especially when that symbol may be arbitrarily far away down the board? More on that below.

The “tape” consists of an infinite sequence of “3-cells”, each of which represents one tape cell of the TM. A 3-cell contains 3 bits of information: The current symbol S on the tape at the cell (0 or 1), whether or not the tape head is scanning the cell (call this bit h (= 0 no, 1 yes)), and also a bit p that keeps track of whether or not the tape head is scanning the PREVIOUS cell. The point of p is to “defy gravity”…

It should be intuitive that if the TM moves right (down), the simulation shouldn’t be so hard, because balls travel downward, and we can use a ball to, say, change the h-bit of 3-cell n to 0 while then changing the h-bit of 3-cell n+1 to 1, thus asserting that the scanning head has moved from tape cell n to tape cell n+1. But how to pass that information upward? That is what the third bit p is for. The bit p is linked via a gear chain back up to h of the previous 3-cell. This allows us to “move” the head back up one cell. Here, as well as everywhere, a challenge lurks in avoiding intersecting gear chains.

(In an earlier construction, each 3-cell also contained an “n” bit, which kept track of whether or not the tape head was in the next cell.)

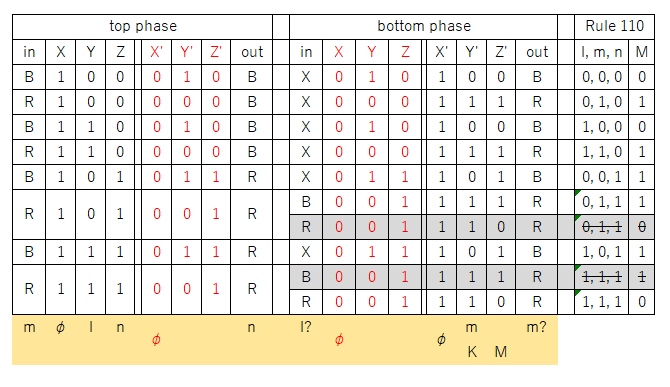

The key question though that stumped me for a long time is how does the finite control get information to and from the tape? In particular, passing information from the tape UP to the finite control seems near impossible without infinite gear chains. Then I had the insight that now seems like “well, duh”: A triggered ball could pass information. Rather, WHICH ball that is triggered is information itself. So, a 3-cell can “tell” the finite control that the symbol being scanned at that cell is a 0 or 1, by triggering the 0-ball or the 1-ball. (There are three “colors” of balls: 0, 1, and Q (for “query”)).

Thus, which hopper a ball comes from tells the finite control what symbol is being scanned, allowing it to correctly change its state.

How to get that to happen was solved by having 2 balls fall per step of the TM. The first is a Q-ball (query), which goes down the tape looking for an h=1 bit in a 3-cell. When it is encountered, that tells that 3-cell to trigger a 0-ball or a 1-ball depending on what the current symbol at that 3-cell is.

Then the finite control can take over, and change the state, but now it also needs to tell that cell below what symbol to write, and whether to move the tape head left (up) or right (down).

The second key insight is that there are really only four possible messages to be passed down to the cell:

L0: Write 0, move head to the left

L1: Write 1, move head to the left

R0: Write 0, move head to the right

R1: Write 1, move head to the right.

So, the finite control sends the ball down one of four “lines” in the “bus”, corresponding to these four messages.

These lines pass down alongside the 3-cell tape, “looking” at the h-bits. When h=1 is encountered, the ball diverts “into” the 3-cell, where the S bit is written as 0 or 1, and where either the p bit is changed (set previous cell’s h-bit to 1, indicating that the head moves up), or to send the ball down a ways and change the h bit of the next 3-cell.

There are a bunch of other details, but these are the main ideas.

I hope this made some kind of sense. I’ve been thinking about this a lot, immersed in the writing and proof, and don’t trust what sounds coherent or incoherent at this point.

I look forward to hearing your comments.

Edit - one day later - I’ve added a new section to the paper that gives the high level ideas on how instead of using an infinite number of triggers, a variation of the construction can use just a single one, not far from the top hopper, but it uses two infinite gear chains running down the board to allow the tape cells to communicate with the finite control. Not sure which is preferable. (Yet another variation in an older version uses finitely many triggers, and no infinite gear chains, but seems like cheating in that each cell encodes the finite control of the TM.)

Edit (sixteen days later) - one can eliminate the infinite gear chains by having information move upward via interlocking “c” shapes, one step at a time for each ball that falls. This way, a computation can send a signal upward, that arrives arbitrarily far above, eventually.

The paper, now at tinyurl.com/ttistcomplete is mostly done.

Finally - is this thread dead? I was expecting some discussion arising from my post, but perhaps people have moved on after three years